Last modified: 2019-03-04 14:52

Status: Released. Starter code and problem descriptions are ready to go!

Update 2019-03-04: For Problem 3 and ONLY Problem 3, you are now authorized to use your own feature transformation functions together with: your own Logistic Regression implementation the standard sklearn implementation of Logistic Regression

Due date: Wed. Mar 6 at 11:59PM EST.

Turn-in links:

- PDF report turned in to: https://www.gradescope.com/courses/33142/assignments/167622/

- ZIP file of source code turned in to: https://www.gradescope.com/courses/33142/assignments/163224/

- ZIP file of test-set predictions for Digit-8-vs-9 task (Problem 2) turned into: https://www.gradescope.com/courses/33142/assignments/161640/

- ZIP file of test-set predictions for Sneaker-vs-Sandal task (Problem 3) turned into: https://www.gradescope.com/courses/33142/assignments/161876/

Files to Turn In:

PDF report:

- Clear human-readable writeup (NOT a notebook export), answering the prompts across Problem 1, Problem 2, and Problem 3.

- This document will be manually graded according to our rubric

- Please: within Gradescope via the normal submission process, annotate for each subproblem exactly which page(s) are relevant. This will save your graders much time!

ZIP file of source code should contain:

- LRGradientDescent.py : Python code file

-

- Used in Problem 1 and Problem 2 and Problem 3

- COLLABORATORS.txt : a plain text file [example], containing

-

- Your full name

-

- Estimate the hours you spent on each of Problem 1, Problem 2, and Problem 3

-

- Names of any people you talked to for help (TAs, students, etc.). If none, write "No external help".

-

- Brief description of what content you sought help about (1-3 sentences)

ZIP file of test-set predictions for Digit-8-vs-9 (Problem 2) should contain one file:

- yproba1_test.txt : plain text file

-

- One line per test-set example in

data_digits_8_vs_9_noisy/x_test.csv

- One line per test-set example in

-

- Each line contains float probability that the relevant example should be classified as a positive example given its features

-

- Should be loadable into NumPy as a 1D array via this snippet:

np.loadtxt('yproba1_test.txt')

- Should be loadable into NumPy as a 1D array via this snippet:

-

- See instructions in Problem 2e below

ZIP file of test-set predictions for Sneaker-vs-Sandal (Problem 3) should contain one file:

- yproba1_test.txt : plain text file

-

- One line per test-set example in

data_sneaker_vs_sandal/x_test.csv

- One line per test-set example in

-

- Each line contains float probability that the relevant example should be classified as a positive example given its features

-

- Should be loadable into NumPy as a 1D array via this snippet:

np.loadtxt('yproba1_test.txt')

- Should be loadable into NumPy as a 1D array via this snippet:

-

- See instructions in Problem 3 below

Starter code:

See the project1 folder of the public assignments repo for this class:

https://github.com/tufts-ml-courses/comp135-19s-assignments/tree/master/project1

Jump to:

Implementing calc_loss method Implementing calc_grad method Implementing fit method Implementing predict_proba method Problem 1: Toy data Problem 2: MNIST digits 8 vs 9 Problem 3: Sneakers vs. sandals Rubric for Evaluating PDF Report

Background

Here, we briefly review the notation for describing the Logistic Regression classifier.

We wish to build a binary classifier that can be trained from a set of \(N\) examples of (feature vector \(x_n\), label \(y_n\)) pairs. Each feature vector is a length-\(F\) vector of real values \(x_n \in \mathbb{R}^F\). Each label is written as \(y_n \in \{0, 1\}\) when it is observed, or \(Y_n\) when treated as a random variable.

To simplify notation, we will write each feature vector as a bias-included vector \(\tilde{x}_n\), a vector of length \(F+1\) where the last entry is always 1.

In code, given a feature array x_NF, we will denote a "bias-included" \(\tilde{x}\) array as xbias_NG.

The parameter of our logistic regression model is a vector \(w\) of size \(G = F+1\):

Remember, the last entry of this weight vector is the bias coefficient.

In code, we'll write the \(G=F+1\)-length vector \(w\) as a 1D array w_G. We can access the bias via the Python code w_G[-1], (where -1 index means "grab the last entry").

Probabilistic Predictions

Probabilistic predictions are made in two steps: first apply the weights to a specific feature vector to obtain a real-valued scalar \(z_n \in \mathbb{R}\). Then, feed this real value through a logistic sigmoid to obtain a probability.

Recall that the logistic sigmoid function \(\sigma(\cdot)\) is defined via the following two equivalent expressions, which map a real-value \(z\) to a probability value between 0 and 1.

Remember that we might wish to use either one of these expressions, depending on \(z\)'s value, to achieve numerical stability and avoid producing inf or nan values. For more information, see:

- "Numerically stable sigmoid function" description from Tim Viera's blog

- Recent recitation materials: NumericalStableImplementation.ipynb

Optimization problem for model training

Given a training set \(\{ x_n, y_n \}_{n=1}^N\), we wish to find the weight vector \(w\) that solves the following minimization problem:

This is equivalent to the following objective, which frames the problem as``minimize negative log likelihood'':

We will write the total loss function we're trying to minimize as \(\mathcal{L}(w)\), where:

Why the funny \(\frac{1}{N \log 2}\) constant in front? Turns out, with this constant applied, we can interpret the loss function \(L(w)\) directly as an upper bound on the error rate of our classifier on the training data.

Optimization algorithm: Gradient descent

We will use first-order gradient descent to solve this optimization problem. We can initialize \(w\) to some valid value (whatever we like!), and then take small steps downhill towards smaller and smaller loss values until we converge to some fixed value. Remember, if we want to go downhill, we need to go in the opposite direction of the gradient/derivative, which points uphill (direction of increasing slope).

FYI, this optimization problem is convex. Thus, no matter the random initialization, gradient descent should converge to a global minimum if the step size is set properly.

Gradient of the total loss function:

We want to take the derivative of the total loss function \(\mathcal{L}(w)\) with respect to each entry \(w_f\) of the weight vector \(w\):

Here, the subscript \(f \in \{1, 2, \ldots G-1, G\}\) indexes all the entries of \(w\), including the bias coefficient.

Gradient of the L2 penalty term:

We use the following identity for the gradient of the L2 penalty term with respect to each entry \(w_f\) of the \(w\) vector:

Gradient of the likelihood term. We can write each example's log likelihood, denoted as \(J\), as a function of intermediate variable \(z_n = w^T \tilde{x}_n\):

Substituting in \(z_n = w^T \tilde{x}_n\), we recover an expression for the log likelihood \(J\) of example at index \(n\) as a function of our parameter vector \(w\):

We can then use the chain rule to compute the gradient of the log likelihood with respect to the weight vector entry for each feature index \(f\) (including the bias) as:

Interpretation: For non-bias weight coefficients indexed by \(f \in \{1, 2, \ldots F\}\), this gradient indicates that to maximize \(\mathcal{J}(w)\) for an example \(n\), we should adjust the value of weight \(w_f\) in the direction of \(\text{sign}(x_f)\) if \(y_n = 1\), or in the opposite direction if \(y_n = 0\). Second, the magnitude of adjustment needed is related to how close the predicted probability \(\sigma(z_n)\) is to the true \(y_n\). Larger gaps require larger adjustments.

For the bias coefficient at \(w_G\) (which is same as \(w_{F+1}\)), the update equation tells us that the bias' change should depend on if \(y_n\) is larger or smaller than \(\sigma(z_n)\).

Coding Tasks

Implementing predict_proba to compute predicted probabilities

Look at the predict_proba method inside the starter code that defines the LRGradientDescent class.

You'll need to write code inside this method so that you can do prediction with your learned logistic regression weights.

Hint: You'll need a numerically stable implementation of the logistic sigmoid function.

Implementing calc_loss to compute the loss function

Look at the calc_loss method inside the starter code that defines the LRGradientDescent class.

You need to fill in the provided calc_loss method to compute the loss function.

This function takes a weight vector \(w\), represented in code as w_G, a 1D NumPy array of size \(G = F+1\) (one entry for each of the F features plus one entry for the bias).

This function produces a scalar loss value \(L(w)\) on the provided dataset, equal to \(L(w)\) defined above.

Hint: You'll need a numerically stable implementation of log loss. We suggest looking at logsumexp.

Implementing calc_grad to compute the gradient of the loss

Look at the calc_grad method inside the starter code that defines the LRGradientDescent class.

You need to fill in the provided calc_grad method to compute the gradient.

This function takes a weight vector \(w\), represented in code as w_G, a 1D NumPy array of size \(G = F+1\) (one entry for each of the F features plus one entry for the bias).

This function produces a 1D array of size \(G\) representing the gradient of the training set loss with respect to the weights. Each entry \(f\) of the gradient should equal \(\frac{d}{d w_f} L(w)\) defined above.

Implementing fit to perform gradient descent

Look at the starter code that defines the LRGradientDescent class.

Fill in the remainder of the provided fit method to perform gradient descent for logistic regression.

Your fit method should meet the following specifications:

Req 1: Initialize \(w\) to the all zeros vector (this is the default already in the starter code).

Req 2: Take gradient steps using a constant step size (denoted in math as \(\eta\)) which you can set in code via the step_size attribute.

Req 3: After each step, print an update to standard out and record progress to internal trace variables.

If the self.verbose attribute is set to True, print a progress update like this:

iter 1/5000 loss 0.065231 avg_L1_norm_grad 0.000116 w[0] 0.000 bias 1.276

Within your fit method, call print() to display a progress update at the very beginning (before any updates occur) and after every update step. This update should display the step number, the current loss, and the mean of the absolute values of the current gradient vector (the averaged L1 norm). Luckily for you, this is done by the current starter code.

Also within your fit method, record these results to the internal attributes: trace_steps, trace_loss, trace_norm_of_grad, trace_w. These are all lists that should just be appended to after each step.

Req 4: After each step, check for divergence using the provided raise_error_if_diverging method.

We've provided this method in the starter code to sanity check your recent steps and verify you aren't hitting NaN or infinite values / seeing loss values increase too much.

Hint: It's possible that our divergence checker is a bit too strict, and may terminate runs that would have eventually converged. If it's giving you problems, feel free to handle errors another way.

Req 5: After each step, check for convergence using the provided check_convergence method.

There are three criteria that ALL should be satisfied:

- the gradient's norm is small (near zero, as it should be at an optima)

- recent loss function values have stabilized across last 100 iterations

- recent parameter values have stabilized across last 100 iterations

If all the convergence criteria are satisfied, the check_convergence call will return True and you can stop the algorithm early.

You should also halt iterations after a maximum of num_iterations steps, which should default to 10,000.

Problems to complete for report

Problem 1: Toy Data

First, test your implementation on a simple problem with 10 total examples in the training set. Each label is binary, and each feature vector is just one-dimensional. The data is:

- 5 negative-labeled examples with evenly-spaced \(x\) values between (-2, -1)

- 5 positive-labeled examples with evenly-spaced \(x\) values between (+1, +2)

You should set alpha=0.1 and use this toy dataset throughout Problem 1.

In your PDF report, provide complete figures and captions for each of the following prompts:

1a: Evidence that your implementation can compute the correct loss and gradient

We'll look specifically at the first entry of the weight vector, denoted \(w_1\) in math and w_G[0] in code. What happens to the loss and gradient as we vary \(w_1\) from -2 to +12? You can assume the bias (the second entry of \(w\)) is fixed to zero.

Create 2 side-by-side line plots:

- loss (y-axis) vs. \(w_1\) (x-axis)

- gradient of \(L\) with respect to \(w_1\) (y-axis) vs. \(w_1\) (x-axis)

You should evaluate loss and gradient using a dense grid of at least 100 possible \(w_1\) points between -2 and +12. That is, you'll consider a sequence of length-2 vectors like the one below as possible inputs to calc_loss and calc_grad:

[-2, 0], [-1.5, 0], [-1.0, 0], ... [+12, 0]

Visually inspect your plot. Does the loss trend make sense? Does the gradient look as expected? Justify your answers in the caption.

Also, by inspecting the loss plot, what is the approximate minimizing value \(w_1^*\) (within one or two decimal places)? Record this in your caption.

1b: Evidence that your implementation finds a (close-enough) optimal weight vector for this toy example.

Using this toy data, create an LRGradientDescent object with init_w_recipe='zeros' and call fit with an appropriate step size to run gradient descent. Verify convergence (or if it doesn't converge, adjust your step size accordingly).

Make a trace plot of the learned values of \(w_1\) and \(w_2\) (the bias for this 2D case) vs. iterations. Do you see the expected trend?

1c: Evidence that your implementation converges again from a different random initialization

Repeat 1b above with another instance of LRGradientDescent, this time initializing the weights from another location (use init_w_recipe='uniform_-1_to_1' when calling the constructor).

Make a trace plot of the learned values of \(w_1\) and \(w_2\) (the bias for this 2D case) vs. iterations. Do you see the expected trend?

Problem 2: Handwritten Digits Classifier: 8 vs. 9

We now consider building a classifier to distinguish images of handwritten digits, specifically the digit 8 from the digit 9. You'll use the \(x\) and \(y\) examples provided in CSV files located in the data_digits_8_vs_9 folder of the starter code. We extracted this data from the well-known MNIST dataset by LeCun, Cortes, and Burges. We have preprocessed lightly for usability as well as to make the problem slightly more difficult by adding a small amount of random noise to each image.

Each example \(i\) has features \(x_i\) representing the gray-scale pixel values of a 28x28 pixel image. Each pixel's feature value varies between 0.0. (black) and 1.0 (bright white), with shades of gray possible in between. We've reshaped and flattened each 28x28 image so that it has a feature vector \(x_i\) of size 784 (= 28 x 28). Each row of the x_train.csv file is one image.

Each example \(i\) has a binary label (\(y_i\)). Here, we've encoded the '8's as a 0 and '9's as a 1. Each row of the y_train.csv file is one image.

Your challenge is to build a logistic regression classifier to distinguish '8' from '9'.

In your PDF report, include the following sections:

2a: Evidence that your implementation of GD converges

First, we want to be sure we can train effectively on this digits data.

Load the full dataset (x_train.csv and y_train.csv), and split it further: use the first 2000 examples as a validation set, and the remainder as your training set.

Don't worry, the rows of the CSV file were created by random shuffling, so you don't need to do any shuffling yourself.

Now, apply your LRGradientDescent implementation to the training set by calling fit, using a moderate regularization strength \(\alpha = 10.0\) and setting `init_w_recipe='zeros'. You'll need to explore what step size to use.

Show 3 specific plots (ideally provided in a 1 row x 3 col single figure):

- line plot of loss vs. iteration (use the

trace_lossattribute) - line plot of the L1 norm of grad vs. iteration (use the

trace_L1_norm_gradattribute) - plot of specific weight vector values (y-axis) vs. iteration (use the

trace_wattribute). Include one line for the bias weight, and one line for the weight coefficient on feature 154.

In each plot, show one line for each of 3 possible step_size settings:

- a small value that almost converges

- a moderate value that definitely converges within 10000 iterations

- a large value that clearly converges faster than the moderate value

You'll have to explore values of step_size yourself to decide on the values to use.

Include a thorough caption for the figure describing what trends you observe (what should happen to the loss across all 3 lines? what actually happens?). Be sure to specify which specific step sizes are shown, any relevant information about other step sizes you tried, and which step size you recommend to use going forward. Did you find any step sizes that diverged?

2b: Model Selection Analysis with Cross-Validation

We'll now perform 3-fold cross-validation to select the best \(\alpha\) L2 penalty hyperparameter for our logistic regression model. Use the starter code file model_selection_via_cv.py.

Consider 5 possible values for L2 penalty strength \(\alpha\) (denoted as alpha in the code):

0.0001, 0.01, 1.0, 100.0, 10000.0

For each value of \(\alpha\), fit a fresh LRGradientDescent model to each of the K possible train/validation splits of your data (each split sets one fold aside as validation, with the remaining K-1 folds for training).

After training on each split, record the error rate on the validation set. Remember, error rate is the fraction of examples the classifier makes mistakes on. That is, error rate = 1 - accuracy.

Hint 2b (#1): Keep in mind that the step_size value needed at one value of alpha may not be effective at another value of alpha. You should try to get each run on each fold to converge, or at least run long enough until you're sure that some \(\alpha\) value is not the one you'd like to select based on this cross-validation estimation procedure. For example, if you first try some \(\alpha_1\) value with good validation error, and then next you try penalty strength \(\alpha_2\) and observe worse validation error than \(\alpha_1\) but lower training error after only 100 iterations, you could halt early and avoid training \(\alpha_2\) all the way to convergence (since you know you prefer \(\alpha_1\) for its generalization ability).

Hint 2b (#2): For this problem, we recommend increasing num_iterations from its default (10000) to a higher value of 30000. You shouldn't need to adjust any convergence tolerances (we didn't in our solutions), but you can if needed within reason.

Make a figure showing a line plot of the validation-set error rate (y-axis) vs \(\alpha\) (x-axis). At each value of \(\alpha\), you should show the error rate averaged across the K folds.

Updated Prompt: Include a thorough caption for the figure describing the trends you observe (any underfitting? any overfitting?). Include text describing your step size selection strategy, and discuss any trends you observed in which step size values resulted in reliable convergence as \(\alpha\) increased.

Be sure to specify which \(\alpha\) value you recommend based on the plot.

2c: Analysis of Mistakes

Using the best \(\alpha\) value from 2b, load the model that corresponds to the first fold.

In your report, print the confusion matrix for this model on its validation set.

In your report, include one plot of 9 example images from the validation set that were scored as false positives. Include another plot of up to 9 example images that were scored as false negatives.

Plot each image using matplotlib's imshow, using the 'Grey' colormap with vmin=0 and vmax=1.0.

Write a short description of what you notice. What kinds of mistakes is the classifier making?

2d: Analysis of Learned Weights

For the same model you studied in 2c, take its 784 learned weight coefficients, and reshape them into a 28 x 28 image.

Plot this image using matplotlib's imshow, using the 'RdYlBu' colormap with vmin=-0.5 and vmax=0.5.

Write a short description of what you notice. What pixels are associated with a digit '8' (have negative weights)? Which pixels are associated with the digit '9' (have positive weights)?

Bonus: Show the same visualization with 2 additional learned weight vectors: one with \(\alpha\) too small and one with \(\alpha\) too large.

2e: Report heldout performance

For the single specific \(\alpha\) value you selected via cross-validation in 2b, train a logistic regression model on the entire provided training dataset (e.g. use all of x_train.csv and all of y_train.csv as your training set).

Then, use this model to produce predicted probabilities on the test set.

You should save these probabilities to a plain text file named yproba1_test.txt, with one line per example. Each line of the file should provide the numerical probability that the corresponding example should be labeled with the positive binary label \(y=1\).

To make your plain-text predicted probability file, you can use code like this:

x_test_NF = np.loadtxt('data_digits_8_vs_9_noisy/x_test.csv')

yproba1_test_N = model.predict_proba(x_test_NF)[:, 1]

np.savetxt('yproba1_test.txt', yproba1_test_N)

To make our automatic scoring possible, your prediction file's name must exactly match "yproba1_test.txt".

Package your prediction file up in a ZIP file, and submit to the Digits 8 vs 9 Heldout Test Evaluation with Leaderboard.

Record the resulting test-set error rate performance (provided by the autograder on Gradescope) in your report.

How well did the 3-fold CV estimate of error rate from 2b match the performance?

Problem 3: Open-ended Analysis of Sneaker vs Sandal

Let's consider another image classification problem: sneakers vs. sandals.

Each input image has the same \(x\) feature representation as the MNIST digits problem above (each example is a 28x28 image, which is reshaped into a 784-dimensional vector). However, now we have images from two classes: sneakers and sandals. These are from the larger Fashion MNIST dataset, made public originally by Zalando Research.

Now, we'd like you to build a logistic regression classifier that works on this new task. Your challenge is open-ended: you're not restricted to using the 784 pixel values only. You are free to use ANY feature transform you can think of to turn the image into a feature vector. Can you achieve an error rate that reaches the top of the leaderboard?

Code Restrictions

You can use our provided LRGradientDescentWithFeatureTransform.py starter code, or you can feel free to use code of your own design based on LRGradientDescent.py. Do not edit LRGradientDescent.py itself, you need to submit this exact file to the autograder to prove how you've solved Problem 1 and Problem 2.

You can swap out the provided starter-code feature transforms with anything, provided that your classifier must be a logistic regression model trained via an implementation of gradient descent.

You cannot call any external classifier code from sklearn or any other library. Thus, import statements like import sklearn.linear_model are NOT allowed.

Update 03/04: To facilitate more people completing this problem, you can use sklearn's implementation of LogisticRegression for Problem 3 and ONLY Problem 3. Be aware though, that you'll still need to do the interesting part (designing new transformed features) yourself.

Data Usage Restrictions

You should not use any additional Fashion MNIST data from other sources, only that provided for you in the starter code.

Ideas for better features

Be creative! If you're stuck, here are a few ideas to consider:

-

does the total number of pixels that are "on" help distinguish sandals from sneakers?

-

how can you identify when multiple pixels are on (bright white) simultanenously?

-

how can you capture spatial trends across pixels that are relevant to the sneaker vs. sandal problem?

Other ideas for improving your model

-

explore data augmentation (can you augment your existing training set by transforming existing images in helpful ways? for example, if you flip each image horizontally, would that be a useful way to "double" your training set size?)

-

explore better optimization (can you find ways to make GD converge faster? find ways to avoid tuning step sizes as much?)

What goes in your report PDF for Problem 3

In your writeup for Problem 3, provide 2-3 paragraphs of concise but complete description of what feature transforms you have tried, why you thought it would work, and what the results were. You should also carefully describe your model fitting and selection process: if you tuned the \(\alpha\) penalty parameter, how did you do that? if you performed any automatic selection of features, how did you do it?

Include at least two figures displaying the results of your experiment. For example, you might use one figure to show a ROC curve for the best "original" features model, as well as an improved ROC curve using your chosen new feature transformations. You then might use another figure to show how specific examples were classified by the "original" features model and the "improved" features models (ideally, you should both examples where predictions improved, and ones where predictions got worse).

You'll be evaluated much more on your PROCESS than on your results. A well-designed experiment and careful evaluation will earn more points than getting to a perfect error rate without an ability to justify what you've done.

Your entire writeup for Problem 3 should be somewhere between half a page and 2 total pages, including all figures.

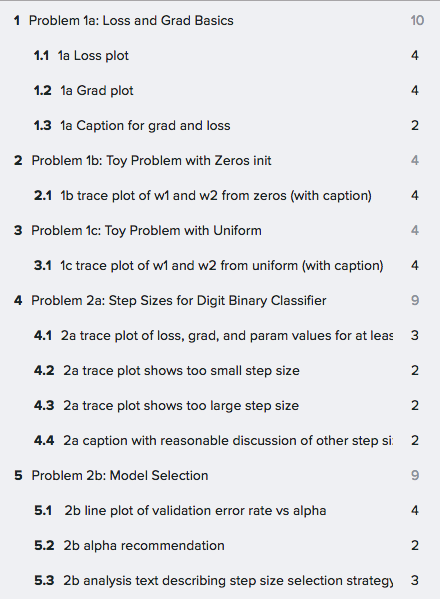

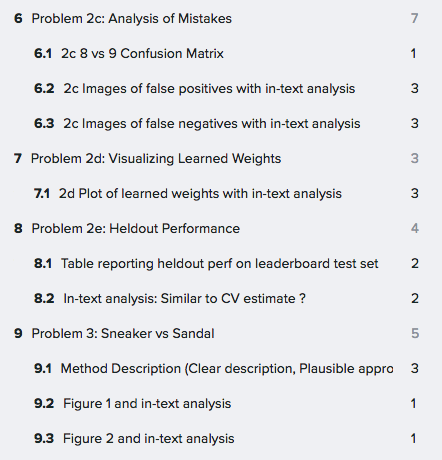

Rubric for Evaluating PDF Report

This should match the rubric outline on Gradescope for the PDF report. If for any reason there is a conflict, the official problem weights on Gradescope will be used.